The Slutzky-Yule effect and the risks of smoothing data

Time series, autocorrelation, and random noise

A time series is a set of observations indexed by time. Time series data are not restricted to any particular scale; the only requirement is that each observation be associated with a successive point in time. This requirement imposes a natural ordering on the data: time (for these purposes) is linear, so every data point has a distinct position within the series.

This ordering affects how time series data are treated. In the statistical analysis of non-time-series data, a common assumption is independence: no data point depends on any other. In a time series, however, past values may be predictors of future values. That is, the series may be correlated with lagged copies of itself, which is called autocorrelation.

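The autocorrelation between observations of a time series $x_1, \dots, x_n$ that are $k$ time steps apart (lag $k$) is estimated by the sample autocorrelation

$$r_k = \frac{\sum_{t=1}^{n-k} (x_t - \bar{x})(x_{t+k} - \bar{x})}{\sum_{t=1}^{n} (x_t - \bar{x})^2},$$

where $\bar{x}$ is the sample mean of the series.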

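The computation of the partial autocorrelation (the correlation at a given lag after the effect of the shorter lags has been removed) is not as simple as that of the autocorrelation, so the details are omitted. Viewed as functions of the lag $k$, the sample autocorrelations and partial autocorrelations form the autocorrelation function (ACF) and the partial autocorrelation function (PACF); their plots are called correlograms.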

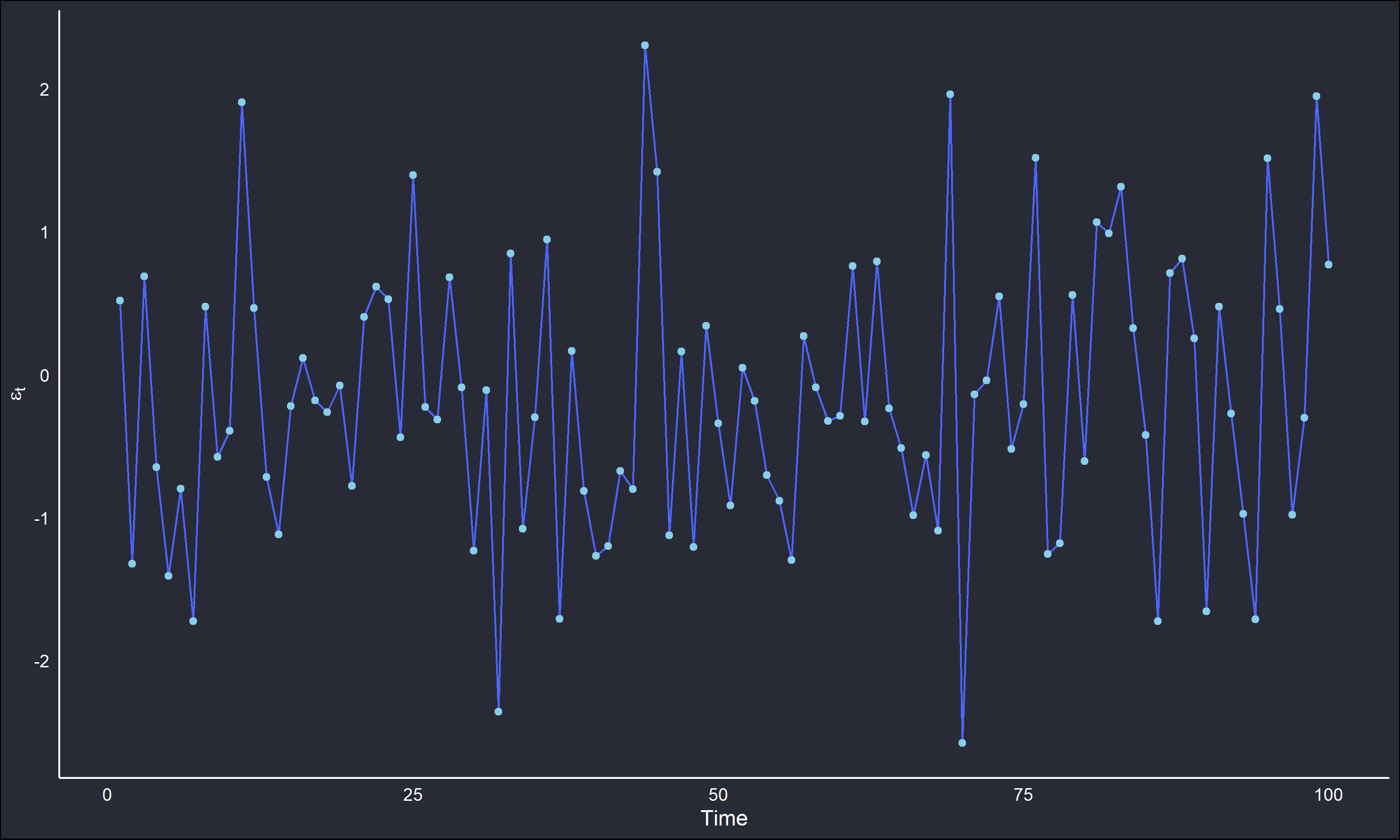
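The sample autocorrelation described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than a reference implementation: the function name `sample_acf` and its arguments are assumptions made for this example, and it uses the common convention of dividing every lag by the same lag-0 sum of squares.

```python
import numpy as np

def sample_acf(x, max_lag):
    """Return the sample autocorrelations r_0, ..., r_max_lag of a 1-D series.

    Hypothetical helper for illustration; every lag is normalised by the
    lag-0 sum of squared deviations, so r_0 is always 1.
    """
    x = np.asarray(x, dtype=float)
    n = len(x)
    d = x - x.mean()                 # deviations from the sample mean
    denom = np.dot(d, d)             # lag-0 sum of squares
    return np.array(
        [np.dot(d[: n - k], d[k:]) / denom for k in range(max_lag + 1)]
    )

# Small worked example on a short, strongly trending series.
r = sample_acf([1, 2, 3, 4, 5], max_lag=2)
print(r)  # approximately [1.0, 0.4, -0.1]
```

In practice a statistics library would normally be used for this, and for the partial autocorrelations in particular, since those require solving a set of linear equations at each lag.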

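Random noise as a time series is a set of independent and identically distributed (IID) observations from some probability distribution. No statistically significant dependency structure should be observed within such a series. In symbols, this is modeled as

$$x_t = \mu + \varepsilon_t,$$

where the $\varepsilon_t$ are IID with mean $0$ and variance $\sigma^2$.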

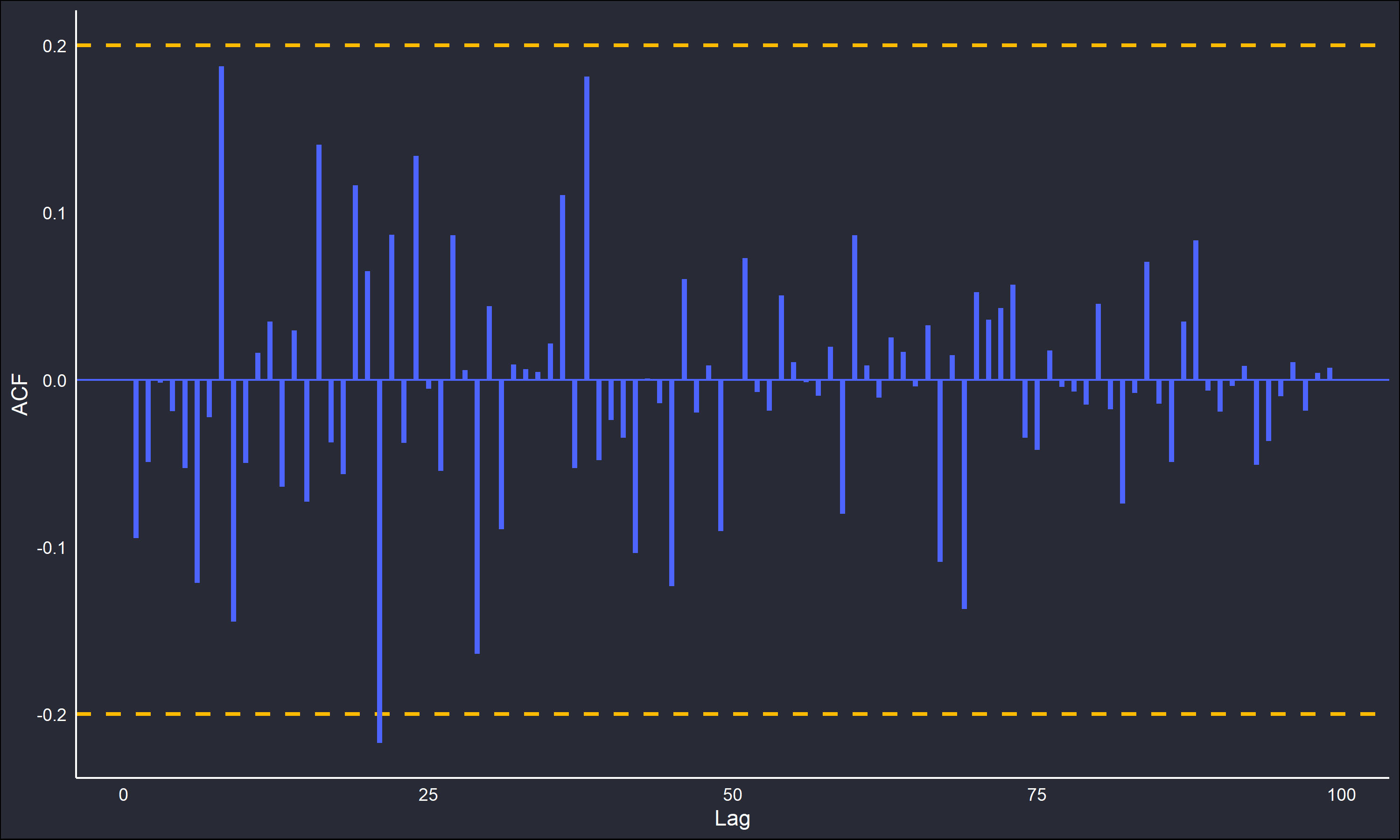

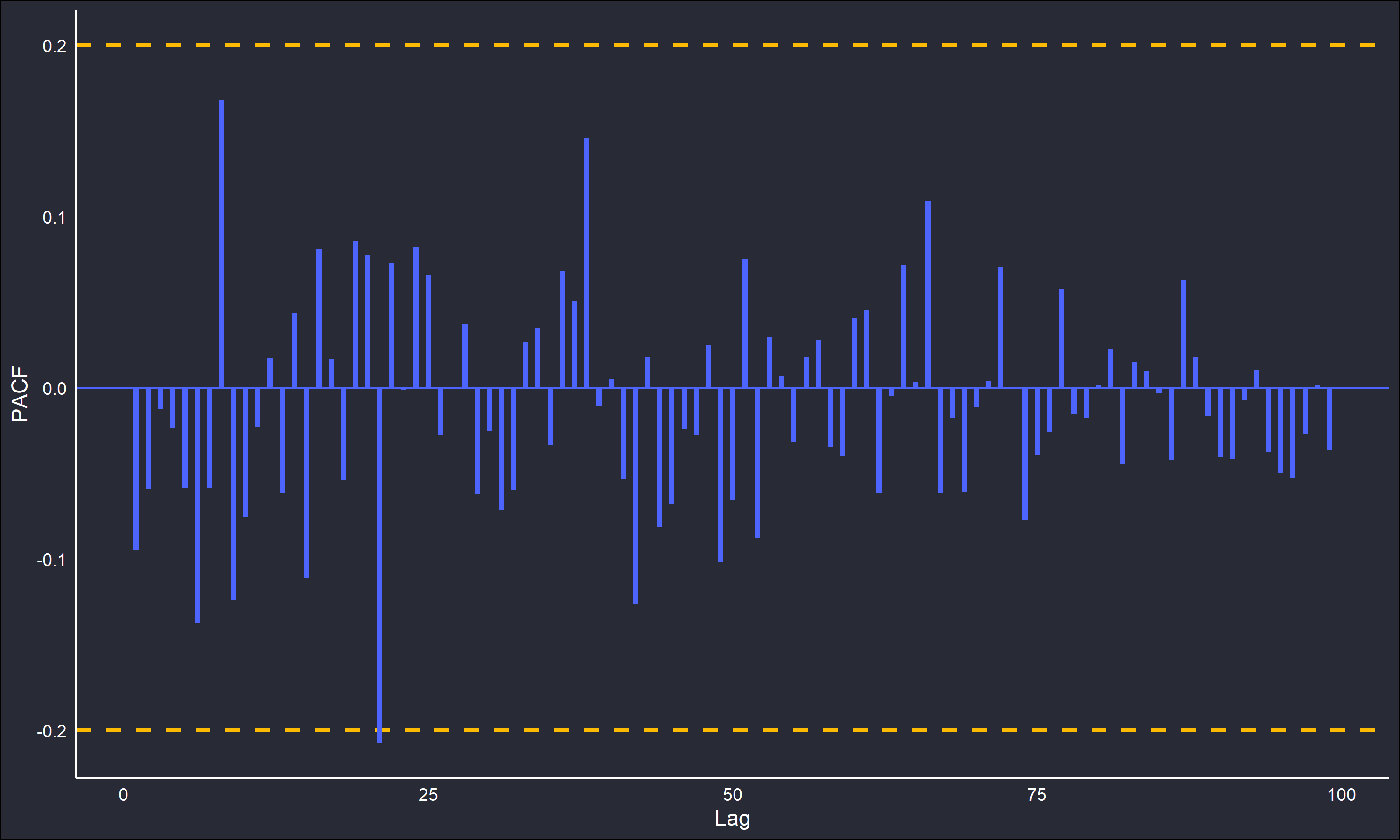

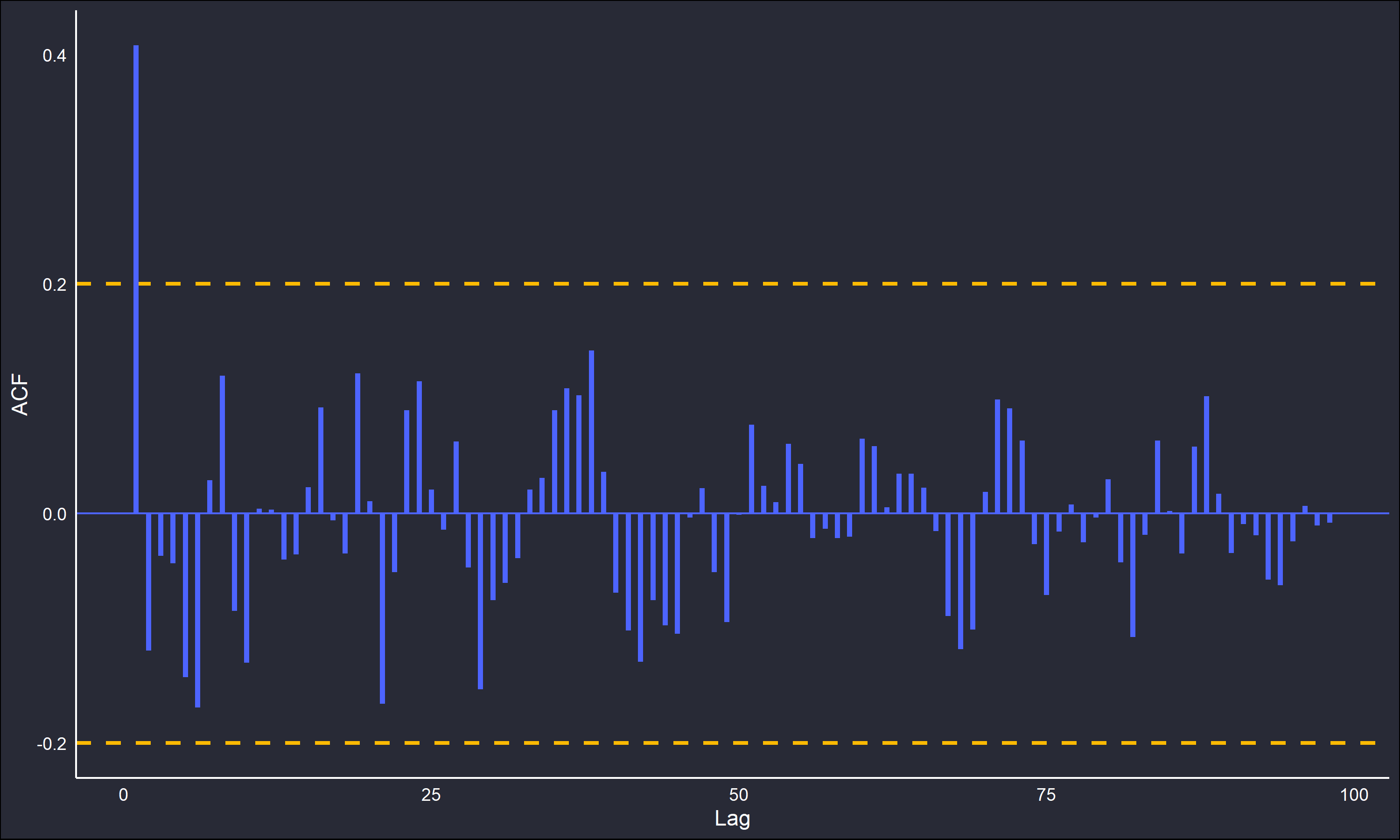

Note the stable behaviour about the distribution’s mean; there is no discernible trend or pattern that suggests dependency. Consequently, the ACF and PACF plots of the series should show no significant “spikes”.

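The correlograms confirm this. The dotted lines mark approximate 95% confidence bounds, which make any dependency easier to identify. For a purely random IID series of length $n$, the sample autocorrelations have an approximate standard deviation of $1/\sqrt{n}$, so the bounds sit at roughly $\pm 1.96/\sqrt{n}$.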
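A minimal sketch of this check, assuming Gaussian noise and the usual large-sample bounds of $\pm 1.96/\sqrt{n}$ for the sample autocorrelations of an IID series. The seed, the series length, the number of lags, and the helper `sample_acf` are all arbitrary choices for this illustration, not taken from the plots above.

```python
import numpy as np

def sample_acf(x, max_lag):
    """Sample autocorrelations r_0..r_max_lag (hypothetical helper)."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    d = x - x.mean()
    denom = np.dot(d, d)
    return np.array(
        [np.dot(d[: n - k], d[k:]) / denom for k in range(max_lag + 1)]
    )

rng = np.random.default_rng(0)       # arbitrary seed, for reproducibility
n = 500
x = rng.normal(size=n)               # IID noise; normality is an assumption

r = sample_acf(x, max_lag=20)[1:]    # drop lag 0, which is always 1
bound = 1.96 / np.sqrt(n)            # approximate 95% bound under IID noise
n_outside = int(np.sum(np.abs(r) > bound))

print(f"bound = +/-{bound:.4f}")
print(f"lags outside the bound: {n_outside} of {len(r)}")
```

Because the bounds are 95% limits, about one lag in twenty is expected to fall outside them by chance even for pure noise, so an isolated marginal spike is not evidence of structure.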

Slutzky-Yule Effect

The Slutzky-Yule effect was first noted in the behaviour of a random series of observations after a linear operator had been applied to it: linear operators (such as a moving average) transform the random series into a series with a dependency structure. That is, common smoothing techniques can induce structure that does not exist in the underlying data.

Consider a simple moving average across the random series

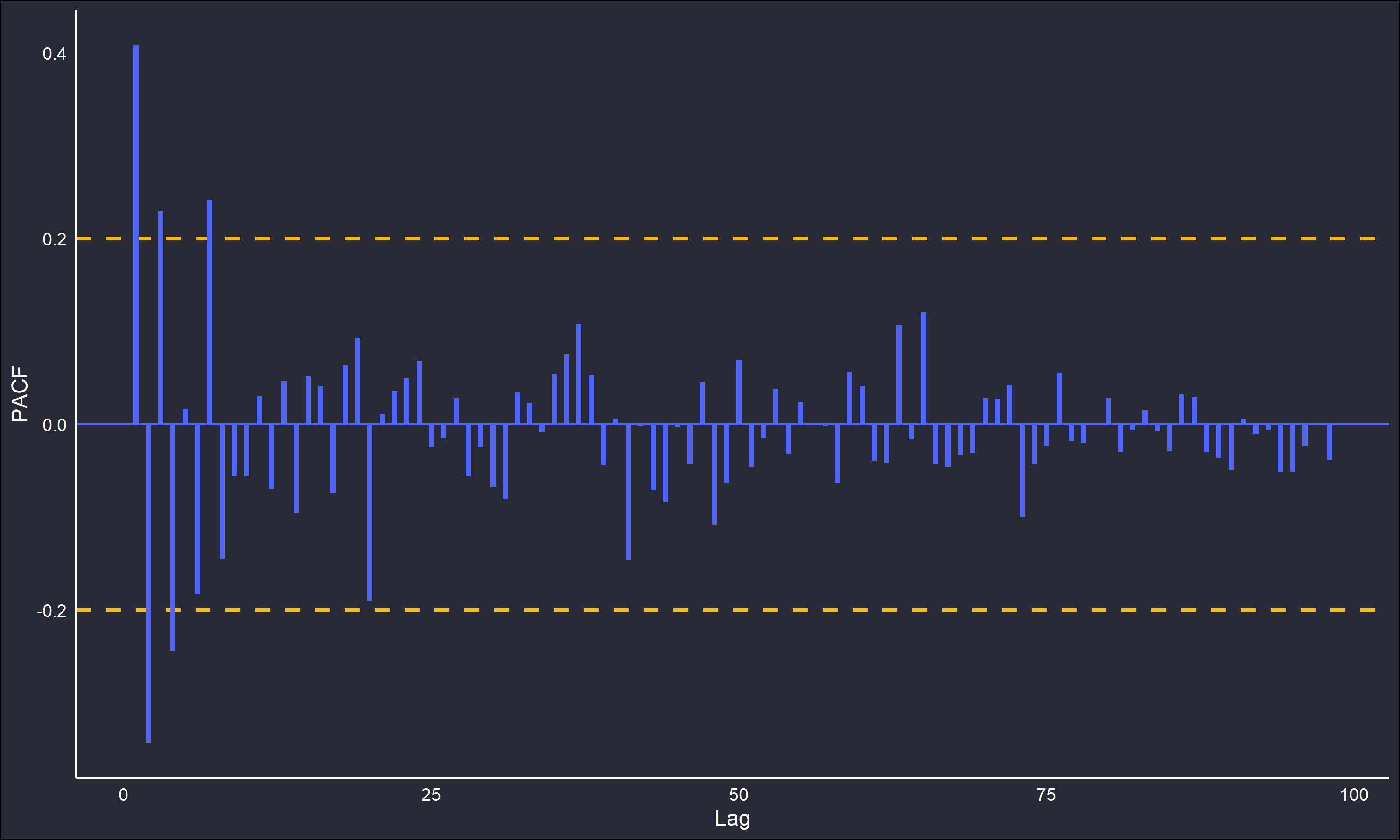

which has dependency at lag

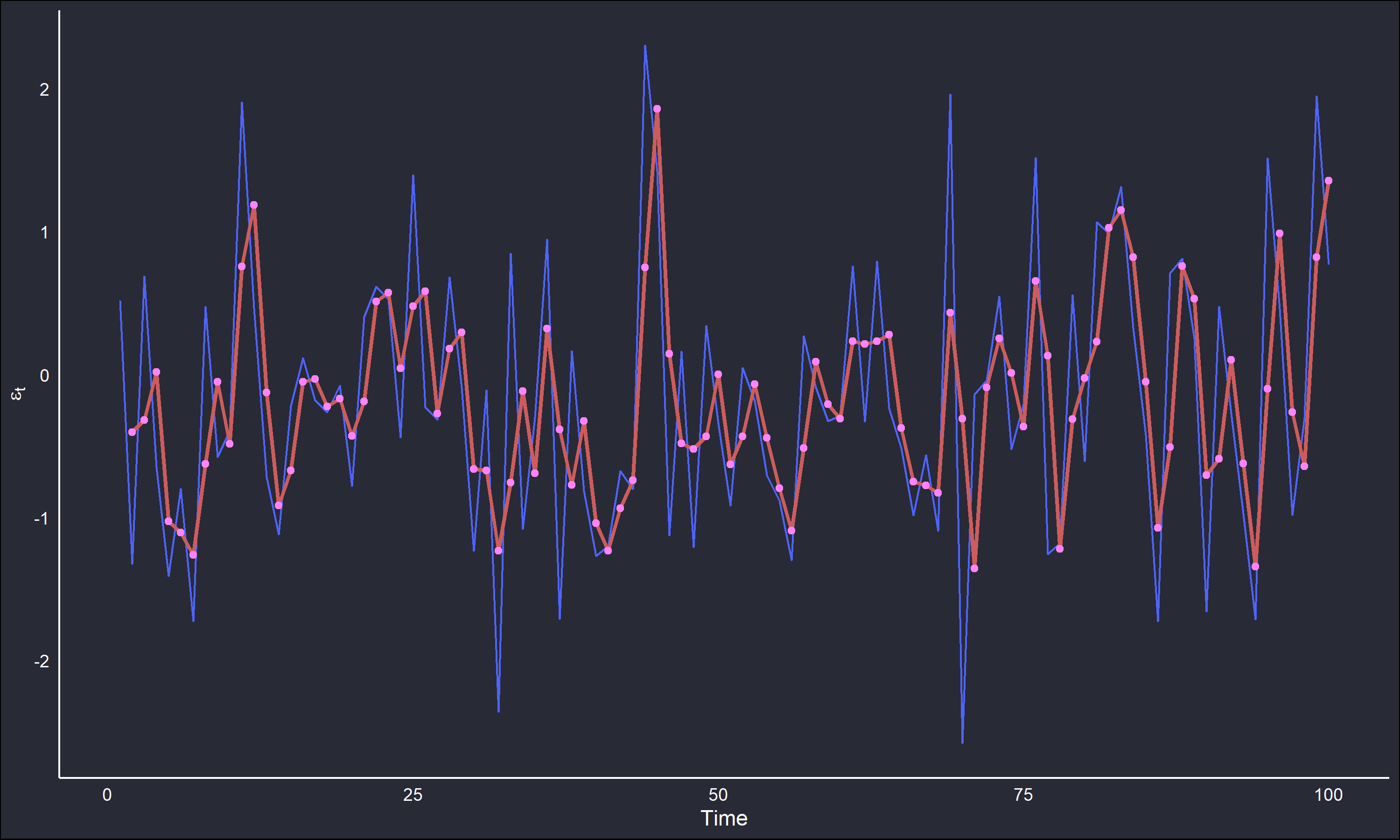

To illustrate this, a moving average of length 2 (

The correlograms show the behaviour expected of an MA(1) series: the single spike at lag 1 in the ACF and the oscillating decay in the PACF are typical of this structure. Even with the most minimal possible averaging, a dependency structure is induced.
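The length-2 case can also be checked numerically. For IID noise with variance $\sigma^2$, the average of two adjacent values has variance $\sigma^2/2$ and lag-1 covariance $\sigma^2/4$, so the theoretical lag-1 autocorrelation is 0.5, with zero autocorrelation at longer lags. The sketch below assumes Gaussian noise; the seed, the series length, and the helper `lag_corr` are hypothetical choices made for this example.

```python
import numpy as np

def lag_corr(series, k):
    """Sample autocorrelation of `series` at lag k >= 1 (hypothetical helper)."""
    d = series - series.mean()
    return np.dot(d[:-k], d[k:]) / np.dot(d, d)

rng = np.random.default_rng(1)       # arbitrary seed, for reproducibility
n = 5000
x = rng.normal(size=n)               # IID noise with no dependency structure

# Moving average of length 2: each value is the mean of two adjacent
# observations. mode="valid" drops the incomplete window at the edge.
y = np.convolve(x, np.ones(2) / 2, mode="valid")

r1_raw = lag_corr(x, 1)              # expected to be near 0
r1_smooth = lag_corr(y, 1)           # expected to be near 0.5
r2_smooth = lag_corr(y, 2)           # expected to be near 0

print(f"lag-1 autocorrelation, raw noise:      {r1_raw:.3f}")
print(f"lag-1 autocorrelation, smoothed noise: {r1_smooth:.3f}")
print(f"lag-2 autocorrelation, smoothed noise: {r2_smooth:.3f}")
```

The raw series shows essentially no lag-1 correlation, while the smoothed series shows a strong one, even though the only thing that changed is the averaging.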

The dangers of this effect are clear. Naively applying smoothing techniques to data that are strongly contaminated with random error can create artificial structure that is misleading. The Slutzky-Yule effect illustrates the importance of understanding the data being analyzed, and the process that generates them, before making any transformations. Moving averages and other linear filters are useful for cutting through noise and capturing underlying signals, but only when a strong enough signal is present. Otherwise you are chasing ghosts.